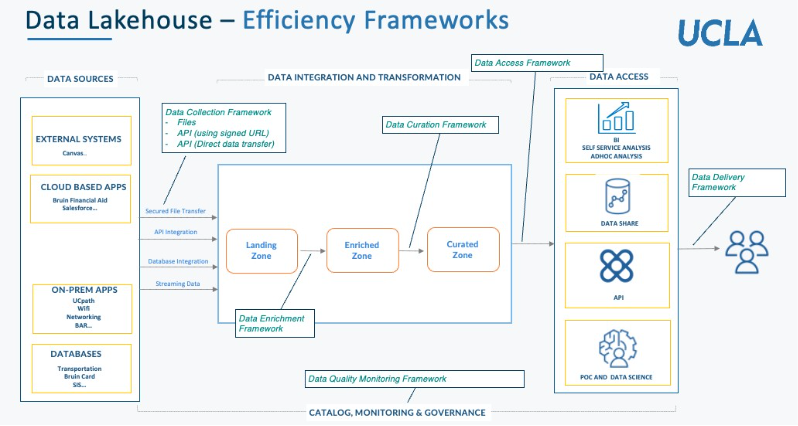

To keep our data integration processes scalable and reduce operational overhead, we have developed a suite of internal frameworks and automation tools that accelerate delivery and maintain consistency:

- Data Collection Framework: Standardized ingestion patterns and tools for reliably capturing data from internal and external sources.

- Data Enrichment Framework: Reliable ingestion, validation, and loading of raw data into structured tables for downstream consumption and curation.

- Data Curation Framework: Transformation of data through standardization, blending, aggregation, and business logic to create refined, analysis-ready datasets.

- Data Access Framework: Secure, role-based access frameworks that ensure governed exposure of data via APIs.

- Data Delivery Framework: Automated pipelines and delivery mechanisms to distribute data efficiently to consumers and systems.

- Data Quality Monitoring Framework: Declarative frameworks to define, validate, and monitor data quality across key datasets.
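To make the "declarative" idea in the Data Quality Monitoring Framework concrete, here is a minimal sketch of rules defined as data and evaluated by a generic engine. It is an illustration under stated assumptions, not the framework's actual interface: the names `Rule`, `RuleResult`, `not_null`, `in_range`, `evaluate`, and the `order_id` / `amount` columns are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


# Hypothetical rule type: illustrative only, not the framework's real API.
@dataclass(frozen=True)
class Rule:
    name: str
    column: str
    check: Callable[[Any], bool]
    max_failure_rate: float = 0.0  # tolerated share of failing rows


@dataclass(frozen=True)
class RuleResult:
    rule: str
    failed_rows: int
    total_rows: int
    passed: bool


def not_null(value: Any) -> bool:
    """Pass when the value is present."""
    return value is not None


def in_range(low: float, high: float) -> Callable[[Any], bool]:
    """Build a check that passes for non-null values within [low, high]."""
    def check(value: Any) -> bool:
        return value is not None and low <= value <= high
    return check


def evaluate(rules: List[Rule], rows: List[Row]) -> List[RuleResult]:
    """Apply every rule to every row and compare failure rates to thresholds."""
    total = len(rows)
    results = []
    for rule in rules:
        failed = sum(1 for row in rows if not rule.check(row.get(rule.column)))
        rate = failed / total if total else 0.0
        results.append(
            RuleResult(rule.name, failed, total, rate <= rule.max_failure_rate)
        )
    return results


# Rules are declared as data; the engine above stays unchanged as rules are added.
RULES = [
    Rule("order_id_present", "order_id", not_null),
    Rule("amount_in_range", "amount", in_range(0, 10_000), max_failure_rate=0.25),
]

if __name__ == "__main__":
    sample = [
        {"order_id": 1, "amount": 120.0},
        {"order_id": 2, "amount": -5.0},
        {"order_id": None, "amount": 40.0},
        {"order_id": 4, "amount": 99.5},
    ]
    for result in evaluate(RULES, sample):
        print(result)
```

Keeping the rules separate from the evaluation logic is what would let the same definitions drive both one-off validation and ongoing monitoring, for example by storing each `RuleResult` per run and alerting when `passed` turns false.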

The diagram below maps these efficiency frameworks to the reference architecture.

Data Lakehouse - Efficiency Frameworks